TARC: Time-Adaptive Robotic Control

Abstract

Fixed-frequency control in robotics imposes a trade-off between the efficiency of low-frequency control and the robustness of high-frequency control, a limitation not seen in adaptable biological systems. We address this with a reinforcement learning approach in which policies jointly select control actions and their application durations, enabling robots to autonomously modulate their control frequency in response to situational demands. We validate our method with zero-shot sim-to-real experiments on two distinct hardware platforms: a high-speed RC car and a quadrupedal robot. Our method matches or outperforms fixed-frequency baselines in reward while significantly reducing the control frequency, and it exhibits adaptive frequency control under real-world conditions.

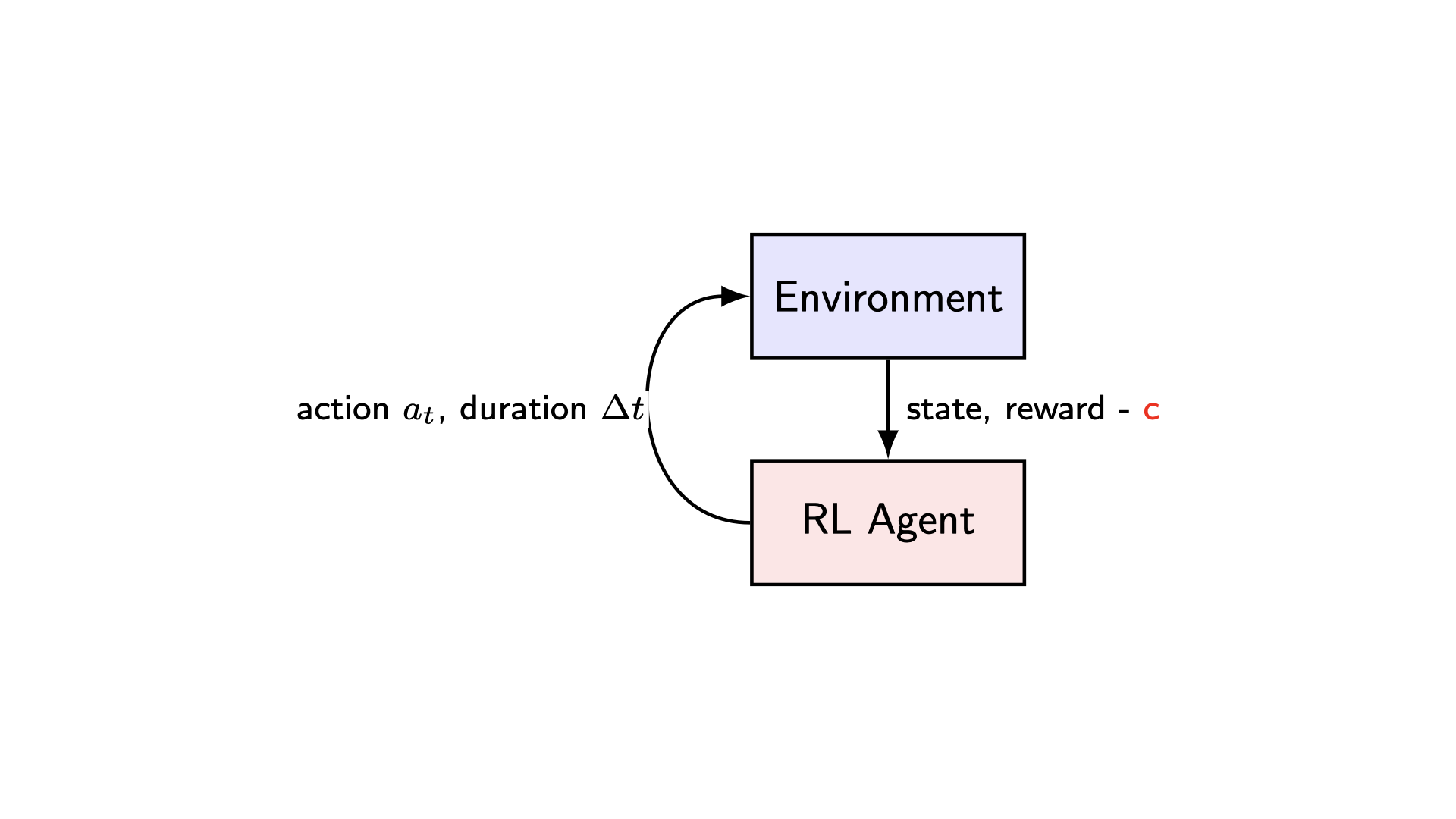

Overview

Overview of the TARC architecture. TARC lets a policy learn both an action and the duration for which that action is applied, so the agent implicitly selects its own control frequency. To encourage low-frequency control where it is adequate, the environment returns a reward penalized by a cost c, so that the agent learns a trade-off between high-frequency and low-frequency control.
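The interaction loop described in the caption above can be sketched as a thin wrapper around a fixed-rate simulator. This is a minimal illustration, not the authors' implementation: the `ToyPointEnv` dynamics, the `TimeAdaptiveWrapper` name, the discrete `repeat` count standing in for the application duration, and the choice to subtract the cost c once per decision are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class ToyPointEnv:
    """Hypothetical 1-D point stepped at a fixed base rate (illustration only)."""
    dt: float = 0.1
    x: float = 1.0

    def step(self, u: float) -> Tuple[float, float]:
        # Integrate the command for one base time step.
        self.x += self.dt * u
        # Reward is the negative distance to the origin.
        return self.x, -abs(self.x)


class TimeAdaptiveWrapper:
    """Holds one action for a policy-chosen number of base steps.

    Assumption: a constant cost `cost_c` is subtracted once per decision,
    so fewer decisions (lower control frequency) incur less total penalty.
    """

    def __init__(self, env: ToyPointEnv, cost_c: float, max_repeat: int):
        self.env = env
        self.cost_c = cost_c
        self.max_repeat = max_repeat

    def step(self, u: float, repeat: int) -> Tuple[float, float, int]:
        # Clamp the requested duration to a valid range of base steps.
        repeat = max(1, min(int(repeat), self.max_repeat))
        total_reward = 0.0
        obs = self.env.x
        for _ in range(repeat):
            obs, r = self.env.step(u)
            total_reward += r
        # Penalized reward returned to the agent for this decision.
        return obs, total_reward - self.cost_c, repeat


if __name__ == "__main__":
    wrapped = TimeAdaptiveWrapper(ToyPointEnv(), cost_c=0.5, max_repeat=5)
    obs, reward, used = wrapped.step(u=-1.0, repeat=2)
    print(obs, reward, used)
```

Under this sketch, a policy that outputs `(u, repeat)` pays the cost once per call to `step`, so holding an action for longer is cheaper whenever doing so does not lose much task reward.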

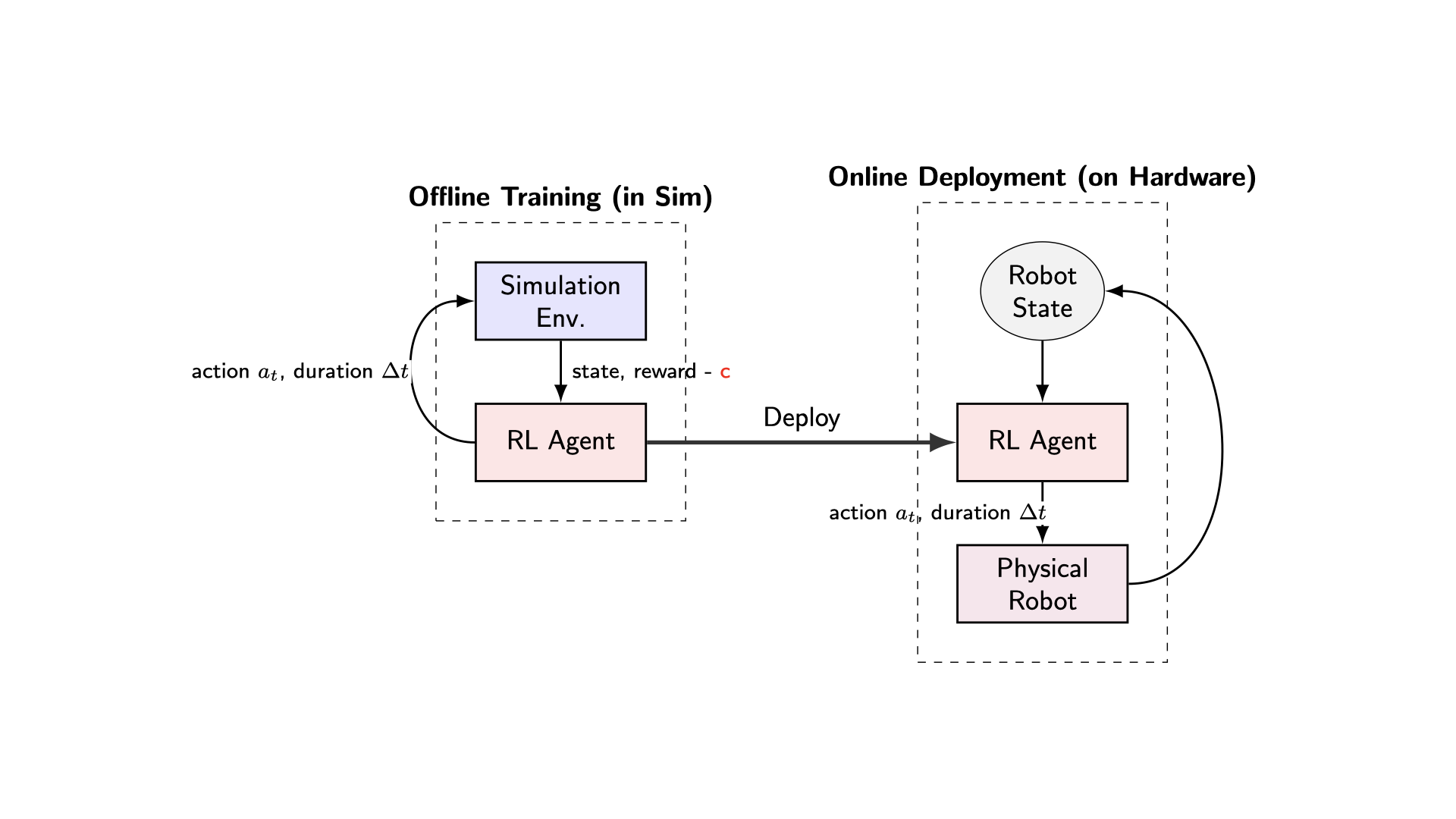

Method

Overview of our deployment setup. We validate TARC through zero-shot sim-to-real deployment on two distinct robotic platforms. Policies are trained entirely offline in simulation and deployed zero-shot onto the hardware without further fine-tuning.

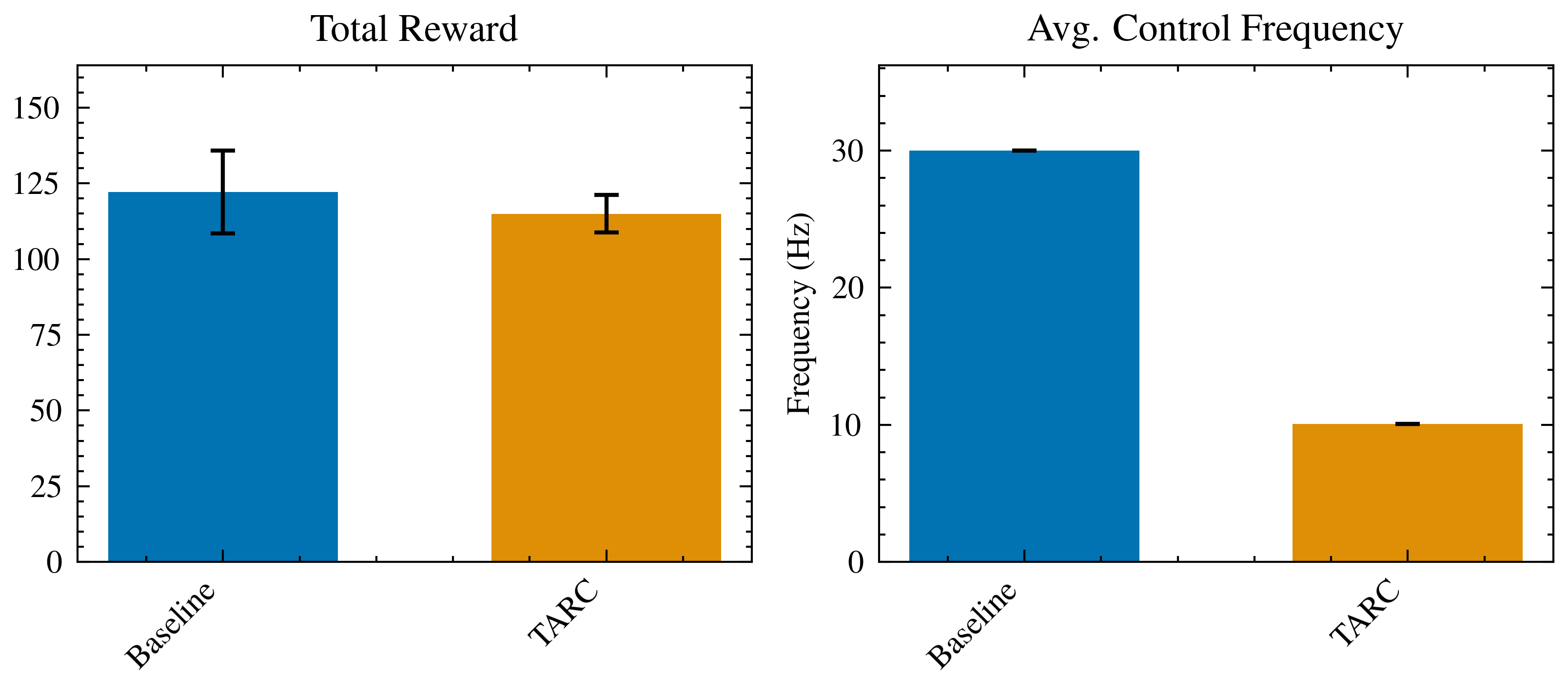

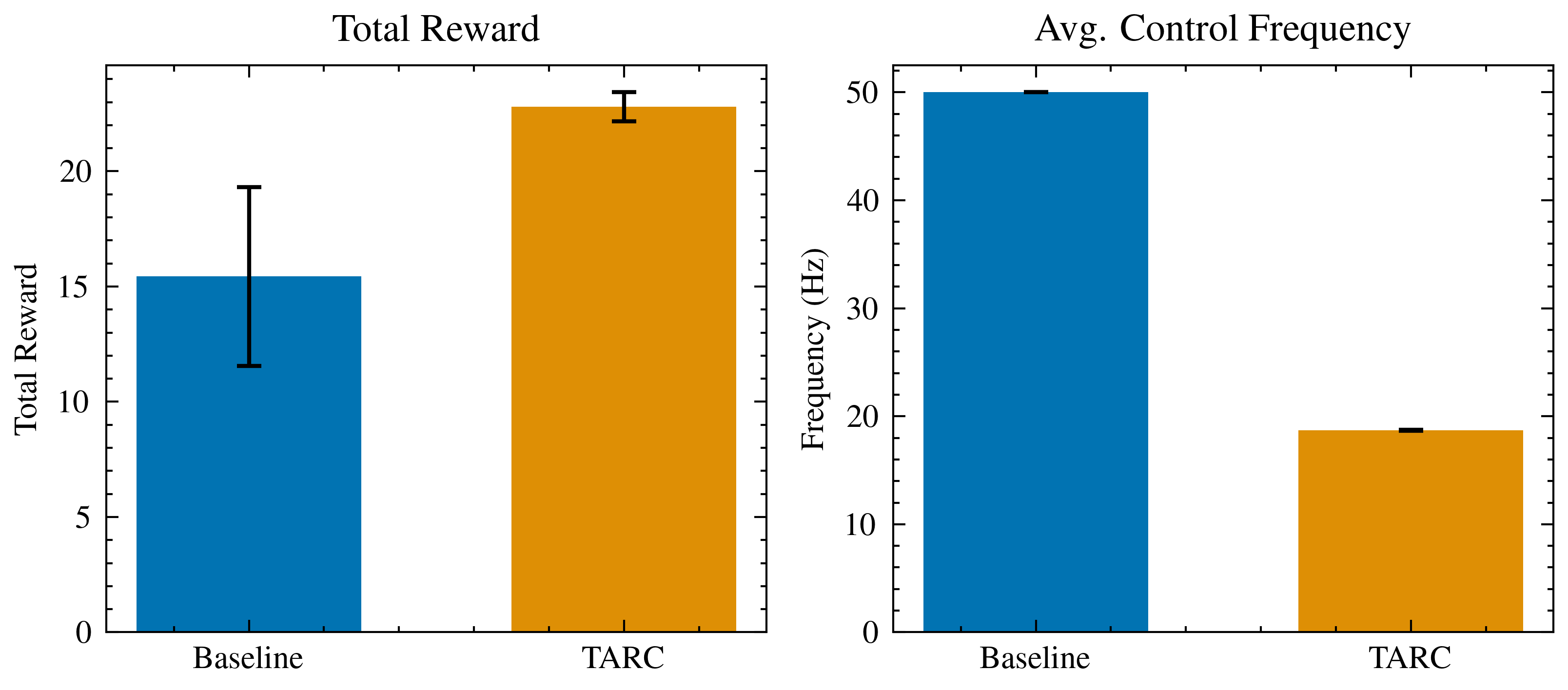

Results

Quadrupedal locomotion

Run Then Turn Scenario

Applying perturbations

RC Car